proposal: add container/queue #27935

Comments

|

Unbounded queues don't fit well on machines with finite memory. |

|

@cznic I get your point, but I disagree with it for a number of reasons.

Maybe the term "unbounded" is a bit confusing. Maybe we should change from "unbounded queue" to "dynamically growing queue". Dynamically growing, which in the queue scenario means the same thing as unbounded, would probably be less confusing. Thoughts? |

|

Agreed that the stdlib or golang.org/x repo would benefit from containers like queue and btree. It may become easier to land new containers once generics lands, tho that's a long way off yet. Dynamic or "elastic" channel buffers have been proposed (and declined) in two forms: a channel buffer pool #20868, and unlimited channel buffer #20352. A workable alternative is one-goroutine-plus-two-channels as in this elastic channel example. It might be useful to include that in your benchmark set. Container structures are typically unbounded/elastic, so that's unlikely to be a recurring objection here :-) |
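The one-goroutine-plus-two-channels alternative mentioned above can be sketched roughly as follows. This is a minimal illustration, not the code from the linked example; the function name `elastic` and its buffering strategy are assumptions made here.

```go
package main

import "fmt"

// elastic is a hypothetical helper: it joins an input and an output channel
// with a goroutine that buffers pending values in a slice, so senders are
// not held up by a slow receiver.
func elastic[T any]() (chan<- T, <-chan T) {
	in := make(chan T)
	out := make(chan T)
	go func(in <-chan T, out chan<- T) {
		defer close(out)
		var buf []T
		for in != nil || len(buf) > 0 {
			// Offer a value on out only when something is buffered;
			// a nil channel in a select case is never chosen.
			var send chan<- T
			var head T
			if len(buf) > 0 {
				send = out
				head = buf[0]
			}
			select {
			case v, ok := <-in:
				if !ok {
					in = nil // input closed: drain what is left
					continue
				}
				buf = append(buf, v)
			case send <- head:
				buf = buf[1:]
			}
		}
	}(in, out)
	return in, out
}

func main() {
	in, out := elastic[int]()
	for i := 0; i < 5; i++ {
		in <- i // accepted even though nobody reads from out yet
	}
	close(in)
	for v := range out {
		fmt.Println(v)
	}
}
```

Note that the buffer here grows without limit, which is exactly the property debated in this thread.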

|

@networkimprov, thanks for posting the other channel proposals, and agreeing with the queue proposal! :-) I looked into https://github.com/kylelemons/iq and both queue designs implemented here: a slice, ring based design as well as the dynamic channel. I have benchmarked two high quality open source, similarly slice ring based implementations: phf and gammazero. I have also benchmarked channels as a queue. Impl7 is dramatically faster than all ring slice based implementations tested as well as channels. Having said that, I tried to benchmark both implementations, but the design for both queues is so "unique" I had trouble getting both implementations to fit my benchmark tests. I couldn't help but notice the "Infinite Queue implementations in Go. These are bad news, you should always use bounded queues, but they are here for educational purposes." title on the repo. Blanket statements like this that are based on faulty research really don't help. I wish the author would do a better job researching other design and implementation options before making such statements. |

The above is a blanket statement because it claims some undefined research is faulty without providing any evidence ;-) |

|

Thanks for noticing my queue. I obviously agree that a queue should be in the standard library. Personally I believe that a deque is what you want in the standard library. It's usually not much more work to implement (or much more complex code-wise) but it's twice as useful and not (in principle) harder to make fast. The proposal sounds good and I wish it success. Oh, and congrats on beating my queue! :-) |

|

Nice queue! However, my feeling is that the state of affairs of containers and the standard library is such that a global assessment of what the standard library should provide for containers is needed before considering inclusion based on the merits of a single implementation of a single data structure. |

|

Re benchmarking kylelemons/iq, its two channels simulate a single standard channel (one is input, the other output). But you already knew that, so the issue must be the goroutine? Re its claim, "you should always use bounded queues," that means channel queues. As channels are principally an "IGrC" mechanism, if the consumer goroutine stops, the producer/s must block on I gather the API isn't thread safe? Have you benchmarked a concurrent scenario, e.g. multiple pushers & single popper? That's where a comparison with kylelemons/iq is most relevant. (And that's how I'd use your queue.) Related: @ianlancetaylor once voiced support for the idea of allocating a bounded channel buffer as-needed, and your list-of-slices technique might apply there. There's not a separate proposal for that as yet. |

|

I remember https://github.com/karalabe/cookiejar/tree/master/collections having a decent implementation. A good concurrent queue will need several things, e.g. lock-freedom in the happy path and non-spinning when blocking. For different concurrent situations (MPMC, SPMC, MPSC, SPSC) and high performance, the code would also look different, since not having to manage concurrency at a particular producer/consumer helps to speed things up. As for tests, you should also test the slowly increasing cases (e.g. 2 x Push, 1 x Pop or 3 x Push, 2 x Pop) and slowly decreasing cases (e.g. fill queue, then 1 x Push, 2 x Pop or 2 x Push, 3 x Pop). Also this document is not including the stable cases (fill 1e6, or larger depending on internal block size, then repeat for N 1 x Push, 2 x Pop). Also missing are large cases with 10e6 items. And the average might not be the best measurement for these; look at percentiles also. |

I believe that the two-array deque is conventional in language libraries. It tends to provide better cache behavior than a linked-list approach, and a lower cost (particularly in the face of mixed push-front/push-back usage) than a single-array queue. |

“Lock-freedom” is not particularly meaningful for programs that run on multiple cores. The CPU's cache coherence protocol has many of the same properties as a lock. |

|

As @cznic notes, unbounded queues are not usually what you want in a well-behaved program anyway. For example, in a Logger implementation, you don't want to consume arbitrary memory and crash (and lose your pending logs) if one of your logging backends can't keep up. If the logs are important, it's better to apply flow-control and delay the program until they can be sent. If the logs are not important, it's better to drop some logs when the buffer gets full, rather than dropping the entire program state. |

|

Given the number and variety of queue implementations that already exist, and their variation on properties such as push-front support and concurrency-safety, I would prefer that we not add a queue to the standard library — especially not before we figure out what's going on with generics (#15292). |

|

I agree that when used for concurrency, unbounded queues are a bad fit. However, unbounded queues are useful for sequential work e.g. when doing a breadth first search. We use slices that can grow arbitrarily, maps that can grow arbitrarily, and stacks that can grow arbitrarily, why can't the programmer be trusted to use them when appropriate? I don't understand what the push back is on the idea (though I agree that it should wait on generics). An unbounded queue is not an unbounded channel. |
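As a concrete instance of the sequential use case mentioned above, here is a minimal breadth-first search sketch. It uses a plain slice as the FIFO queue; the graph representation and the function name `bfs` are assumptions made for illustration.

```go
package main

import "fmt"

// bfs returns the nodes reachable from start in breadth-first order.
// The queue grows as needed; nothing here requires a fixed bound.
func bfs(graph map[int][]int, start int) []int {
	visited := map[int]bool{start: true}
	queue := []int{start}
	var order []int
	for len(queue) > 0 {
		n := queue[0]
		queue = queue[1:] // pop from the front
		order = append(order, n)
		for _, next := range graph[n] {
			if !visited[next] {
				visited[next] = true
				queue = append(queue, next) // push to the back
			}
		}
	}
	return order
}

func main() {
	graph := map[int][]int{1: {2, 3}, 2: {4}, 3: {4}, 4: nil}
	fmt.Println(bfs(graph, 1)) // prints [1 2 3 4]
}
```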

|

Thanks @egonelbre for pointing out to this https://github.com/karalabe/cookiejar/blob/master/collections/queue/queue.go I have added this queue to the bench tests. Feel free to clone the repo and run the tests to validate below results by yourself. This queue does have good performance for fairly large data sets, but its performance for small data sets, performance and memory wise, are not very good. This is a very good implementation, I really like it, but:

|

|

@networkimprov the kylelemons/iq is a very unique design which, it seems to me, was built to prove the point that channels do not make good unbounded queues. I can't agree more with this. Channels are meant for goroutine synchronization, not as a sequential data store like a traditional queue, which by the way is a core use case for queues (if you can't process fast enough, store). That's why I was actually adamant in including buffered channels in the tests: they are not meant to be used as queues. If people really want to use them as queues, then a separate proposal with a separate discussion should follow. This is not a "dynamic channel" proposal. Please keep that in mind. Regarding the claim, "you should always use bounded queues", I understood (by reading the code) that he/she meant channels. My point was that his/her statement is not clear and bounded enough, so people reading it might think that no unbounded queue, regardless of its design, implementation, performance, etc. is good. I can't agree with that. Impl7 is not safe for concurrent use. As the map and all stdlib containers are not safe for concurrent use, the new queue should follow the same pattern. Not all users will need a queue that is safe for concurrent use. So IMO, a version that is safe for concurrent use is a topic for another proposal/discussion, just like we have a separate sync.Map for a map implementation that is safe for concurrent use. I do talk a bit about the subject in the design document though. |

That's sort of the plan. Take a look at this response. I can't apply flow control all the way to the clients. This would make the hosting system slower, affecting its clients (the human beings). Non-critical infrastructure such as logging should not affect the hosting system significantly. This is a problem that has no solution other than to either queue or discard the logs. Discard should be the very last approach, only used when queueing is no longer possible. FWIW, CloudLogger already treats important logs in a very different way than regular logs, so even if one of the backend servers dies and a whole bunch of logs get lost, that's by design. All the important logs are not long term cached and will not be lost (in large numbers, at least). Also I get your concerns around using too much memory, but think of this queue as a data structure that is also highly efficient in dealing with large amounts of data, gigabytes of data. It has to be unbounded because each device has a certain amount of available memory to be used at any given time. It should be up to the engineer using the queue to decide how much memory he/she wants to use. It's the job of the language designers to provide tools that allow the engineers to handle their needs with the maximum possible efficiency, and not dictate how much memory their programs should use. If we do have and can provide to all Go engineers a data structure that is flexible enough that they know they will get really good performance no matter their needs, I think that data structure brings real value. Go engineers have been building their queues for a long time now. Often with [pretty bad results](https://github.com/christianrpetrin/queue-tests/blob/master/bench_queue.md). That's also my point here. Go engineers are already doing "the bad" thing of using a lot of memory. The proposed solution just allows them to keep doing that in a much more efficient way. Again, I'm not proposing a super crazy, specialized data structure here. 
Coming from Java and C#, I was super surprised Go doesn't offer a traditional queue implementation. Having a traditional queue implementation, especially a super flexible and fast one, also helps bring all those Java, C#, etc devs to the Go world. |

@bcmills please consider below.

|

|

Slices can be used as queues, but often they're not. Sometimes a program collects items and sorts them, for example. A bounded slice is not helpful in this case. If the sorting requires more memory than available, there's no other option, in the first approximation, than to crash. Maps serve a different purpose, but the argument about bounded/unbounded is the same. There are many other general data structures with different big-O characteristics of their particular operations. One usually carefully selects the proper one and the argument about bounded/unbounded is still the same. Channels typically serve as a queue between concurrently executing producers and consumers. They have a limit that blocks producers when reached and provides useful back pressure. Making a channel unlimited loses that property. A non-channel queue (FIFO) offers a rather limited set of operations: Put and Get (or whatever names were chosen). If it is to be used in a scenario where a slice, map or other generic data structure can be used, then there's no real need to have or use it. The remaining scenarios for using a FIFO are IMO mostly, if not only, where the producers and consumers execute concurrently. If a FIFO is used effectively as a channel there are two important questions to answer:

Also note that slices and maps that exist today in the language are type generic. The proposed unbound queue seems to use |

See https://golang.org/cl/94137 (which I ought to dust off and submit one of these days). |

|

Also to help validate my point of the whole bunch of problematic Go queues out there, I was able to validate the cookiejar queue actually has a memory leak. Just ran some bench tests locally with large items added. The memory growth is not linear to the growth of work. Also the last 1bi test took so long (5+ minutes), I had to abort the test. Impl7 doesn't suffer from these problems. I would most certainly not recommend cookiejar queue implementation until these issues are fixed. |

|

This is a worthy proposal, but, since generics are an active topic of discussion (https://go.googlesource.com/proposal/+/master/design/go2draft.md), I agree with @bcmills that we should not pursue this further until that discussion has settled. It will inevitably affect the API, and possibly the implementation, of any new container types in the standard library. |

|

Let me add that a queue implementation is just as good outside the standard library as in. There is no rush to adding queues to the standard library; people can use them effectively as an external package. |

Go philosophy takes a "when in doubt, leave it out" stance, so some ppl thumb-down stuff they hardly/never use. For example, notice the thumbs on this proposal to drop complex number support: #19921. From what I've read, the maintainers don't make decisions based on thumb-votes. |

|

@ianlancetaylor agree the generics discussion should move forward. My only concern is how much longer it will take until a final decision is made and deployed. There's a clear need for a general purpose queue in the Go stdlib. Otherwise we wouldn't see so many open source queue implementations out there. Can we get some sort of timeline on when the decision on generics will be made? Also agree a queue could be deployed to any open source repo, but please read the background piece of my proposal to help you understand why I think there's a serious need for a high perf, issue free queue in the Go stdlib. To highlight the point: Using external packages has to be done with great care. Packages in the stdlib, however, are supposed to be really well tested and performant, as they are validated by the whole Go community. A decision to use a stdlib package is an easy one, but using an open source implementation that is not widely adopted is a much more complicated decision to make. The other big problem is how do people find out which are the best, safe to use queue implementations? As pointed out by @egonelbre, this one looks like a really interesting queue, but it has hidden memory leaks. Most Go engineers don't have the time to validate and properly probe these queues. That's the biggest value we can provide to the whole community: a safe, performant and very easy to use queue. |

|

@ianlancetaylor how about landing a queue in golang.org/x in the interim? |

|

There is no specific timeline for generics support. The argument about what should be in the standard library is a large one that should not take place on this issue. I'll just mention the FAQ: https://golang.org/doc/faq#x_in_std . |

I disagree with the characterization of

The point is specifically (and it would be helpful if you'd acknowledge that instead of dismissing it as an "edge case") that this flexibility incurs a cost, in the form of more allocations and indirections. The generic queue implementation is slower. And FTR, I predict that generics won't change that either (though we'll have to see that once they are implemented). |

|

At the least, this proposal should be marked as "on hold for generics". But really I think this should be done outside the standard library and prove its necessity first. We have not been creating new packages from scratch in the standard library for quite some time. |

|

@rsc I agree we should wait for the generics discussion to take place. In the meantime, I'll try to validate the proposed solution as much as possible by pinging golang-nuts, making a proper external package, etc. The biggest problem about exposing the queue as an external package only is how to bring awareness about it. Marketing is a gigantic problem that I can't solve by myself. It will probably take years before the package really takes off and people start using it and report their experiences. In any case, I'll keep an eye and try to join the generics discussion as well. Thanks for taking a look at the proposal! |

|

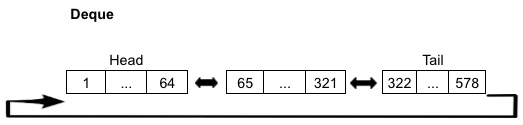

Given the many suggestions, I have built a new queue and deployed it as a proper package. The new queue is based on impl7 and is actually a deque now. Appreciate the suggestions (@phf, @bcmills) as a deque is indeed a much more flexible data structure that can be used not only as a FIFO queue, but also as a LIFO stack and as a deque with mixed push/pop. The new deque is a considerably more advanced data structure than impl7 due to the fact that it is now a deque and also because it has a new design. The new design leverages the linked slices design by making it an auto shrinking ring. The new design improved impl7 performance and efficiency quite dramatically in most tests, although it is now a bit slower on the fill tests. Refer here for the design details. I have also implemented many new unit, integration, API and, especially, benchmark tests. @egonelbre, much appreciated for the test suggestions and for benchmarking cookiejar's queue. Given the need for real world usage data, I need to somehow get people to start using it. I'll do my best to raise awareness about the deque, but this is an area where I really need help. If you are using a queue/stack/deque, please consider switching to the new deque. Also if you know someone who is using a queue/stack/deque, please let them know about the deque. Below are a few select tests of deque vs impl7. deque vs impl7 - FIFO queue - fill tests deque vs impl7 - FIFO queue - refill tests Although impl7 marginally outperforms deque on the fill test, deque outperforms impl7 deque vs impl7 - FIFO queue - stable tests Refer here for all benchmark tests. The new package is production ready and I have released its first version. It can be found here. Regarding the suggestions for more tests (here, here) and to gather real world data, @bcmills, @egonelbre, do the new benchmark tests help address your concerns? Regarding the questions whether a FIFO queue is actually useful (here, here), @egonelbre, @rsc, does a deque help address your concerns? 
Please consider that a deque can be used not only as a FIFO queue, but also as a LIFO stack as well as with mixed push/pop. Regarding the concerns whether Go needs a specialized queue or whether just using a simple slice as a queue is good enough (here), @Merovius, does the new queue's performance vs slice help address your concerns? In the wake of all the new benchmark tests and the much improved deque design, do you still feel like a naive deque implementation that uses a slice, such as CustomSliceQueue, is the best option? |

I'm an outsider to this conversation, but please take this point: the Go stdlib (or golang.org/x) should not be a marketing mechanism for your code; especially not for its clever implementation. That line of thinking can only decrease the stdlib's technical quality. |

@tv42, thanks for joining the discussion! I realized (and mentioned) the marketing problem only after it was proposed to build an external queue package and get real, production systems to use it to validate its performance. My point was that I need help to raise awareness about the Deque package, so people would consider using it. It was never my intention in the first place to even publish an external package. The goal was to propose a very efficient queue implementation to be added to the standard library. For that, there should be no need to market the deque in any shape or form other than to propose it here. |

|

Wanted to throw in a use case here as I've been searching for a queue-like solution and none of the existing implementations I can find address it (which has me suspicious I'm thinking about the problem the wrong way, or focused on the wrong solution, but ignoring that for now). Might make sense in an example: Let's say the app is sending messages to a remote system asynchronously. So it sends a number of messages individually (batching handled transparently), and gets an acknowledgement some time in the future of some, or all, of the messages. The app needs to handle things like losing its connection, so it has to hang on to the messages until it receives that acknowledgement. If it does lose the connection, once reestablished, it has to resend them. At first this seems like a pretty straightforward unbounded circular queue. Something that is usually small, but can grow when needed, and can handle frequent push/pop without reallocation. However, the difficulty I mentioned is when the connection is reestablished. The app needs to resend the messages, so it has to iterate the queue. But it can't remove messages from the queue until they've been acknowledged. All the queue implementations I've seen (including the one proposed) only allow you to peek at the first item. Meaning that we'd have to send one message and wait for that ack before proceeding to the next. This can be extremely slow, and without batching, it may never catch back up to real-time. |

|

@phemmer I think you could also remove an element and add it again (to the other end). But also, wouldn't something like |

|

Removing and adding to the end destroys ordering. The connection could die half way through the re-sending, and the message source is still adding its own messages to the queue as well. I've considered using a map like you suggest, but it would mean that when re-sending, I'd have to grab the keys and sort them before re-sending. And as the message source is still adding messages, this may have to be done several times, skipping the ones that are now in flight. Whereas with a queue I could just iterate until I reach the end. But it's not a terrible idea. As for why not use a slice: because of the high amount of reallocation, or manual work to prevent reallocation. As messages are added, and shifted off the front, the slice would either have to be reallocated, or the contents moved over. But if we don't think the ability to peek at the whole queue is something the proposed solution should address, then this can be dropped. Don't want to hijack this issue for solving my problem. Just wanted to throw the use case out for consideration. |

|

@christianrpetrin If we are going to add container/deque, then it should probably support generics from the very beginning. Perhaps we should also wait for more clarity on how #48287 will affect API design? P.S. I think your work is great and I’m not asking you to rewrite it. I’m only pointing out that it would be a real shame to implement a pre-generics API for a stdlib container. |

|

my implementation https://github.com/alphadose/ZenQ also this -> #52652 |

|

@alphadose this is about implementing an unbounded queue. |

|

Generics are out. Should we remove the "Hold"? This issue discusses too many implementation details. If I understand the proposal correctly, with the current generics design, we are likely to propose a set of APIs as follows?

package queue

// Queue implements a FIFO queue.

// The zero value of Queue is an empty queue ready to use.

type Queue[T any] struct

func (q *Queue[T]) Push(v T)

func (q *Queue[T]) Pop() T

func (q *Queue[T]) Len() int

func (q *Queue[T]) Front() T

func (q *Queue[T]) Tail() T

func (q *Queue[T]) Clear() |
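To make the API above concrete, here is a deliberately naive slice-backed sketch of it. It is not the implementation proposed in this issue. The method set mirrors the list above, but the field name `items` and the choice to return the zero value from an empty queue are assumptions made here, since the listed signatures leave that behavior open.

```go
package main

import "fmt"

// Queue is an illustrative slice-backed FIFO queue.
// The zero value is an empty queue ready to use.
type Queue[T any] struct {
	items []T
}

// Push adds v to the back of the queue.
func (q *Queue[T]) Push(v T) { q.items = append(q.items, v) }

// Pop removes and returns the front element, or the zero value of T
// if the queue is empty (an assumption of this sketch).
func (q *Queue[T]) Pop() T {
	var zero T
	if len(q.items) == 0 {
		return zero
	}
	v := q.items[0]
	q.items[0] = zero // drop the reference so it can be collected
	q.items = q.items[1:]
	return v
}

// Len reports the number of queued elements.
func (q *Queue[T]) Len() int { return len(q.items) }

// Front returns the front element without removing it.
func (q *Queue[T]) Front() T {
	var zero T
	if len(q.items) == 0 {
		return zero
	}
	return q.items[0]
}

// Tail returns the back element without removing it.
func (q *Queue[T]) Tail() T {
	var zero T
	if len(q.items) == 0 {
		return zero
	}
	return q.items[len(q.items)-1]
}

// Clear removes all elements.
func (q *Queue[T]) Clear() { q.items = nil }

func main() {
	var q Queue[string]
	q.Push("a")
	q.Push("b")
	fmt.Println(q.Front(), q.Tail(), q.Len()) // prints a b 2
	fmt.Println(q.Pop(), q.Pop(), q.Len())    // prints a b 0
}
```

Returning the zero value makes an empty queue indistinguishable from a queue holding a zero element, which is one reason a `(T, bool)` result may be preferable.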

|

+1 to the above PS:- it would be even better if we can make the STL thread-safe by default which is not the case in C++ |

|

Per #27935 (comment) this should be done outside the standard library first. Has that happened? |

|

It should have happened since day 1 because the proposal also included an implementation that lives here: https://github.com/christianrpetrin/queue-tests/blob/master/queueimpl7/queueimpl7.go, although it is not a generic version. |

|

A good candidate https://github.com/liyue201/gostl |

|

I added a generic implementation of Benchmark results |

|

Completely agree, @sfllaw! Any first Deque version that makes into the STL should support generics, so I added support for generics in Deque v2.0.0. Check it out! https://github.com/ef-ds/deque I appreciate the kind words! |

|

@changkun, @ianlancetaylor, regarding your comments here and here. @changkun, yes, completely agree we should remove the hold and move forward with the proposal. @ianlancetaylor, yes, a double ended queue, which is a major improvement over the initially proposed FIFO queue, was implemented and released here in 2018. Currently, the released Deque package has over 200 open source repositories importing it in over 400 packages, including some large open source projects such as go-micro, ziti, flow, etc! Unfortunately, GitHub doesn't give me info about private repos importing it, but the number of git clones, which sits at almost 150 clones every single day, suggests Deque is likely being used by potentially thousands of private projects as well. Check out below some of Deque's usage metrics. I believe the proposal should be approved due to the main points below:

|

@changkun, regarding the API, Deque's current API is as follows. Should the proposal be approved, I propose we go with this design. However, I'm completely open to reviewing and making changes to the API design, the source code and the documentation, should the community agree with the changes.

type Deque[T any] struct

func New[T any]() *Deque[T]

func (d *Deque[T]) Init() *Deque[T] // Init initializes or clears deque d.

func (d *Deque[T]) Len() int

func (d *Deque[T]) Front() (T, bool)

func (d *Deque[T]) Back() (T, bool)

func (d *Deque[T]) PushFront(v T)

func (d *Deque[T]) PushBack(v T)

func (d *Deque[T]) PopFront() (T, bool)

func (d *Deque[T]) PopBack() (T, bool)

Note: The second, bool result in Front, Back, PopFront and PopBack indicates whether a valid value was returned; if the deque is empty, false will be returned. This follows the same idea used by map (i.e. "value, ok := myMap["key"]") |
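The "value, ok" convention described in the note can be illustrated with a small stand-in type. The `Deque` below is a naive slice-backed sketch written for this example only; it is not the released package and covers just enough of the listed method set to show FIFO and LIFO use.

```go
package main

import "fmt"

// Deque is a hypothetical stand-in with the same method shapes as the
// API listed above; the slice-backed storage is an assumption.
type Deque[T any] struct {
	items []T
}

// PushBack appends v to the back of the deque.
func (d *Deque[T]) PushBack(v T) { d.items = append(d.items, v) }

// PopFront removes and returns the front element; ok is false when empty.
func (d *Deque[T]) PopFront() (v T, ok bool) {
	if len(d.items) == 0 {
		return v, false
	}
	v = d.items[0]
	d.items = d.items[1:]
	return v, true
}

// PopBack removes and returns the back element; ok is false when empty.
func (d *Deque[T]) PopBack() (v T, ok bool) {
	if len(d.items) == 0 {
		return v, false
	}
	last := len(d.items) - 1
	v = d.items[last]
	d.items = d.items[:last]
	return v, true
}

func main() {
	var d Deque[int]
	for i := 1; i <= 3; i++ {
		d.PushBack(i)
	}
	// Used as a stack: take the most recently pushed value first.
	if v, ok := d.PopBack(); ok {
		fmt.Println("LIFO:", v) // prints LIFO: 3
	}
	// Used as a queue: drain the rest in insertion order.
	for v, ok := d.PopFront(); ok; v, ok = d.PopFront() {
		fmt.Println("FIFO:", v)
	}
}
```

The boolean result lets the drain loop stop cleanly without a separate length check.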

|

@alphadose, thanks for bringing light to the GoSTL's Deque. I agree GoSTL is a good deque. I personally really like its segmented design, however, I added GoSTL's deque to the Deque's benchmark tests and it doesn't perform very well against Deque. As an example, check out below the results of the Microservice test. Similar results are observed across all/most tests. Refer here for details. |

@lovromazgon, much appreciated for the contribution to impl7! However, Deque (which is like impl7's v2) supports generics now and we're proposing to add Deque to STL (instead of impl7) |

|

Note that the next hurdle here for adding container types to the standard library is some plan for iterating over a generic container. We aren't going to add any generic containers to the standard library until that is settled, so that we aren't locked into an unusual iteration approach. |

|

Sounds good, @ianlancetaylor . What's the current state of the conversation? Is there a proposal for it already? |

|

@christianrpetrin Yes, related discussions are in #54245 and #56413. |

Proposal: Built in support for high performance unbounded queue

Author: Christian Petrin.

Last updated: November 26, 2018

Discussion at: #27935

Design document at https://github.com/golang/proposal/blob/master/design/27935-unbounded-queue-package.md

Abstract

I propose to add a new package, "container/queue", to the standard library to support an in-memory, unbounded, general purpose queue implementation.

The queue is a very old, well established and well known concept in computer science, yet Go doesn't provide a specialized, safe to use, performant and issue free unbounded queue implementation.

Buffered channels provide an excellent option to be used as a queue, but buffered channels are bounded and so don't scale to support very large data sets. The same applies to the standard ring package.

The standard list package can be used as the underlying data structure for building unbounded queues, but the performance yielded by this linked list based implementation is not optimal.

Implementing a queue using slices as suggested here is a feasible approach, but the performance yielded by this implementation can be abysmal in some high load scenarios.

Background

Queues that grow dynamically have many uses. As an example, I'm working on a logging system called CloudLogger that sends all logged data to external logging management systems, such as Stackdriver and Cloudwatch. External logging systems typically rate limit how much data their service will accept for a given account and time frame. So in a scenario where the hosting application is logging more data than the logging management system will accept at a given moment, CloudLogger has to queue the extra logs and send them to the logging management system at a pace the system will accept. As there's no telling how much data will have to be queued, since it depends on the current traffic, an unbounded, dynamically growing queue is the ideal data structure to be used. Buffered channels are not ideal in this scenario as they have a limit on how much data they will accept, and once that limit has been reached, the producers (routines adding to the channel) start to block, making the adding to the channel operation an "eventual" synchronous process. A fully asynchronous operation in this scenario is highly desirable as logging data should not significantly slow down the hosting application.

The above problem is one that, potentially, every system that calls another system faces. And in the cloud and microservices era, this is an extremely common scenario.
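The blocking behavior of a full buffered channel described above can be observed with a non-blocking send. The sketch below is illustrative only; the helper name `trySend` and the capacity of 2 are assumptions, not part of CloudLogger.

```go
package main

import "fmt"

// trySend attempts a non-blocking send and reports whether it succeeded.
// A plain `ch <- msg` would block instead once the buffer is full.
func trySend(ch chan<- string, msg string) bool {
	select {
	case ch <- msg:
		return true
	default:
		return false // buffer full: the caller must wait, drop or queue elsewhere
	}
}

func main() {
	logs := make(chan string, 2) // bounded: holds at most two pending entries
	fmt.Println(trySend(logs, "first"))  // prints true
	fmt.Println(trySend(logs, "second")) // prints true
	fmt.Println(trySend(logs, "third"))  // prints false
}
```

With a bounded channel the producer is left with three choices at that point: block, drop the entry, or store it somewhere that can grow, which is the role the proposed queue is meant to fill.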

Due to the lack of support for built in unbounded queues in Go, Go engineers are left to either:

Both approaches are riddled with pitfalls.

Using external packages, especially in enterprise level software, requires a lot of care as using external, potentially untested and hard to understand code can have unwanted consequences. This problem is made much worse by the fact that, currently, there's no well established and disseminated open source Go queue implementation according to this stackoverflow discussion, this github search for Go queues and Awesome Go.

Building a queue, on the other hand, might sound like a compelling option, but building an efficient, high performance, bug free unbounded queue is a hard job that requires a pretty solid computer science foundation as well as a good deal of time to research different design approaches, test different implementations, make sure the code is bug and memory leak free, etc.

In the end what Go engineers have been doing up to this point is building their own queues, which are for the most part inefficient and can have disastrous, yet hidden performance and memory issues. As examples of poorly designed and/or implemented queues, the approaches suggested here and here (among many others) require a linear copy of the internal slice for resizing purposes. Some implementations also have memory issues such as an ever expanding internal slice and memory leaks.

Proposal

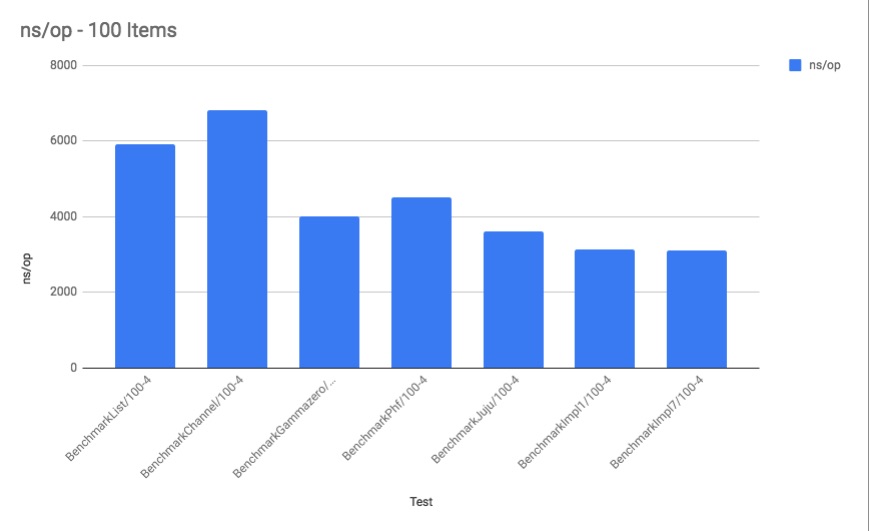

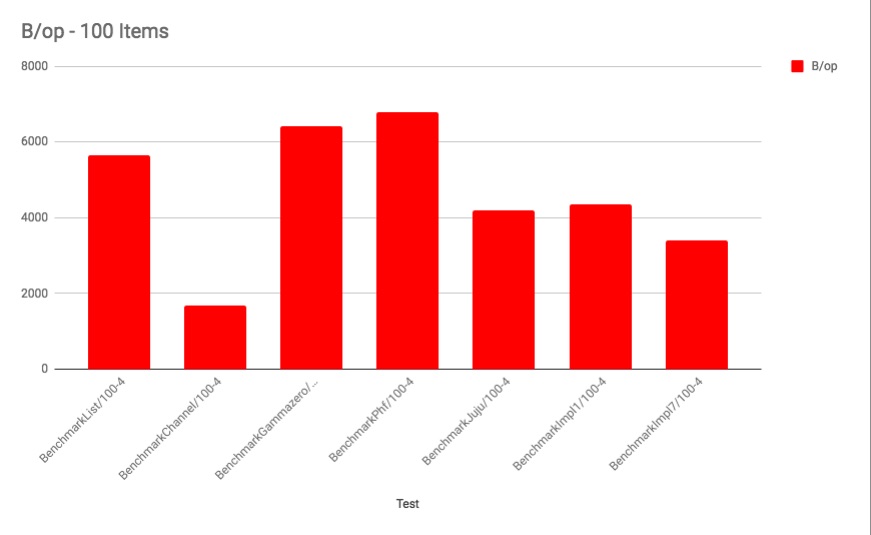

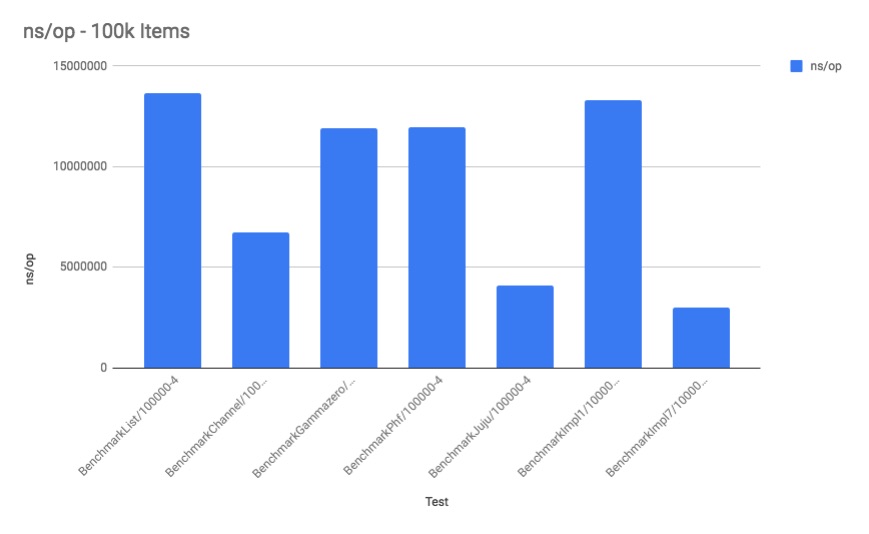

I propose to add a new package, "container/queue", to the standard library to support in-memory unbounded queues. The proposed queue implementation offers excellent performance and very low memory consumption when comparing it to three promising open source implementations (gammazero, phf and juju); to use Go channels as queue; the standard list package as a queue as well as six other experimental queue implementations.

The proposed queue implementation offers the most balanced approach to performance given different loads, being significantly faster while still using less memory than every other queue implementation in the tests.

The closest data structure Go has to offer for building dynamically growing queues for large data sets is the standard list package. When comparing the proposed solution to using the list package as an unbounded queue (refer to "BenchmarkList"), the proposed solution is consistently faster and displays a much lower memory footprint.
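For readers unfamiliar with the baseline, here is a minimal sketch of how the standard list package is typically used as a FIFO queue. The helper names `newQueue` and `drain` are made up for this illustration and are not part of any benchmark code.

```go
package main

import (
	"container/list"
	"fmt"
)

// newQueue is a hypothetical helper that enqueues vals at the tail of a new list.
func newQueue(vals ...interface{}) *list.List {
	l := list.New()
	for _, v := range vals {
		l.PushBack(v) // enqueue at the tail
	}
	return l
}

// drain is a hypothetical helper that dequeues every value from the front
// of l and returns the values in FIFO order.
func drain(l *list.List) []interface{} {
	var out []interface{}
	for l.Len() > 0 {
		e := l.Front()                 // oldest element
		out = append(out, l.Remove(e)) // Remove returns the element's value
	}
	return out
}

func main() {
	q := newQueue(0, 1, 2)
	fmt.Println(drain(q)) // prints "[0 1 2]"
}
```

Every `PushBack` allocates a separate list element, which is the per-item overhead the linked list discussion in this proposal is concerned with.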

Reasoning

There are two well-accepted approaches to implementing queues when it comes to the queue's underlying data structure: a linked list or a slice.

Using a linked list as the underlying data structure for an unbounded queue has the advantage of scaling efficiently when the underlying data structure needs to grow to accommodate more values. This is because the existing elements don't need to be repositioned or copied around when the queue needs to grow.

However, there are a few concerns with this approach:

performance

On the other hand, using a slice as the underlying data structure for unbounded queues has the advantage of very good memory locality, making retrieval of values faster compared to linked lists. Also, an "alloc more than needed right now" approach can easily be implemented with slices.

However, when the slice needs to expand to accommodate new values, a well adopted strategy is to allocate a new, larger slice, copy over all elements from the previous slice into the new one and use the new one to add the new elements.

The problem with this approach is the obvious need to copy all the values from the older, smaller slice into the new one, yielding poor performance when the number of values that need copying is fairly large.

Another potential problem is a theoretically lower limit on how much data slice based queues can hold: slices, like arrays, have to allocate their positions in sequential memory addresses, so the maximum number of items the queue would ever be able to hold is the maximum size a slice can be allocated on that particular system at any given moment. Due to modern memory management techniques such as virtual memory and paging, this is a very hard scenario to corroborate through practical testing.

Nonetheless, this approach doesn't scale well with large data sets.
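To make the copying cost concrete, below is a rough sketch of a naive slice backed queue that grows by doubling and counts how many elements it has to copy along the way. The `sliceQueue` type and `copiesFor` helper are hypothetical and exist only for this illustration; they are not one of the benchmarked implementations.

```go
package main

import "fmt"

// sliceQueue is a deliberately naive, hypothetical slice backed queue.
type sliceQueue struct {
	items  []interface{}
	copied int // total number of elements copied while growing
}

// Push appends v, allocating a slice twice as large and copying every
// existing element whenever the current slice is full.
func (q *sliceQueue) Push(v interface{}) {
	if len(q.items) == cap(q.items) {
		newCap := 2 * cap(q.items)
		if newCap == 0 {
			newCap = 1
		}
		grown := make([]interface{}, len(q.items), newCap)
		q.copied += copy(grown, q.items) // all existing elements are moved
		q.items = grown
	}
	q.items = append(q.items, v) // capacity is available, no reallocation here
}

// copiesFor reports how many element copies n pushes trigger.
func copiesFor(n int) int {
	var q sliceQueue
	for i := 0; i < n; i++ {
		q.Push(i)
	}
	return q.copied
}

func main() {
	for _, n := range []int{16, 17, 1 << 20} {
		fmt.Printf("pushes=%d copies=%d\n", n, copiesFor(n))
	}
}
```

With doubling, the total number of copies stays proportional to the number of pushes, but each individual growth step copies the whole queue at once, which is the pause this section is concerned with for large data sets.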

Having said that, there's a third, newer approach to implementing unbounded queues: use fixed size linked slices as the underlying data structure.

The fixed size linked slices approach is a hybrid between the first two, providing the good memory locality of arrays alongside the efficient growing mechanism linked lists offer. It is also not limited by the maximum size a slice can be allocated, being able to hold and deal efficiently with a theoretically much larger amount of data than pure slice based implementations.
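As a rough illustration of the idea (and not the proposed implementation itself), a fixed size linked slices queue could be sketched as below. The type and method names, and the `chunkSize` constant, are assumptions made for this example.

```go
package main

import "fmt"

// chunkSize is an assumed fixed slice size; 128 mirrors the value
// discussed elsewhere in this proposal.
const chunkSize = 128

// chunk is one fixed size slice plus a link to the next one.
type chunk struct {
	vals []interface{}
	next *chunk
}

// LinkedSliceQueue is a hypothetical sketch of the linked slices design.
type LinkedSliceQueue struct {
	head, tail *chunk
	hp         int // index of the next value to read in head
	n          int // number of values currently stored
}

// Len returns the number of values in the queue.
func (q *LinkedSliceQueue) Len() int { return q.n }

// Push adds v to the end. When the tail slice is full, a new fixed size
// slice is linked in; existing values are never copied.
func (q *LinkedSliceQueue) Push(v interface{}) {
	if q.tail == nil {
		q.tail = &chunk{vals: make([]interface{}, 0, chunkSize)}
		q.head = q.tail
	} else if len(q.tail.vals) == chunkSize {
		c := &chunk{vals: make([]interface{}, 0, chunkSize)}
		q.tail.next = c
		q.tail = c
	}
	q.tail.vals = append(q.tail.vals, v)
	q.n++
}

// Pop removes and returns the oldest value; ok is false on an empty queue.
func (q *LinkedSliceQueue) Pop() (v interface{}, ok bool) {
	if q.n == 0 {
		return nil, false
	}
	v = q.head.vals[q.hp]
	q.head.vals[q.hp] = nil // drop the reference so it can be collected
	q.hp++
	q.n--
	switch {
	case q.n == 0:
		// Queue is empty, so head and tail are the same chunk: reuse it.
		q.head.vals = q.head.vals[:0]
		q.hp = 0
	case q.hp == chunkSize:
		// Head slice fully consumed: move on to the next linked slice.
		q.head = q.head.next
		q.hp = 0
	}
	return v, true
}

func main() {
	var q LinkedSliceQueue
	for i := 0; i < 300; i++ {
		q.Push(i)
	}
	first, _ := q.Pop()
	fmt.Println(first, q.Len()) // prints "0 299"
}
```

Growing only ever allocates one more fixed size slice, so the cost of a push does not depend on how many values the queue already holds.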

Rationale

Research

A first implementation of the new design was built.

The benchmark tests showed the new design was very promising, so I decided to research other possible queue designs and implementations with the goal of improving the first design and implementation.

As part of the research to identify the best possible queue designs and implementations, I implemented and probed a total of 7 experimental queue implementations. Below are a few of the most interesting ones.

Also as part of the research, I investigated and probed the open source queue implementations below.

The standard list package and buffered channels were probed as well.

Benchmark Results

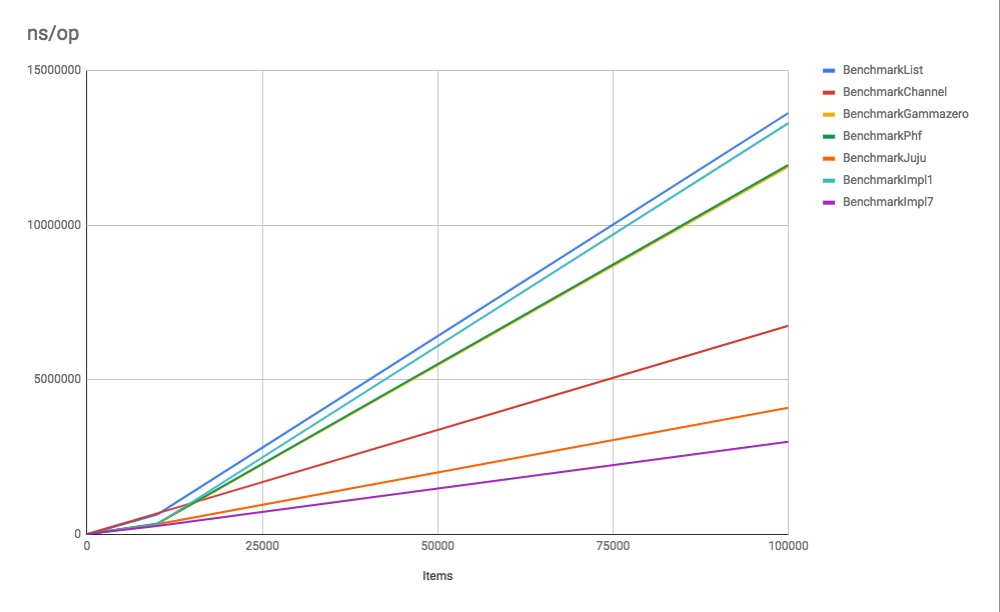

Add and remove 100 items

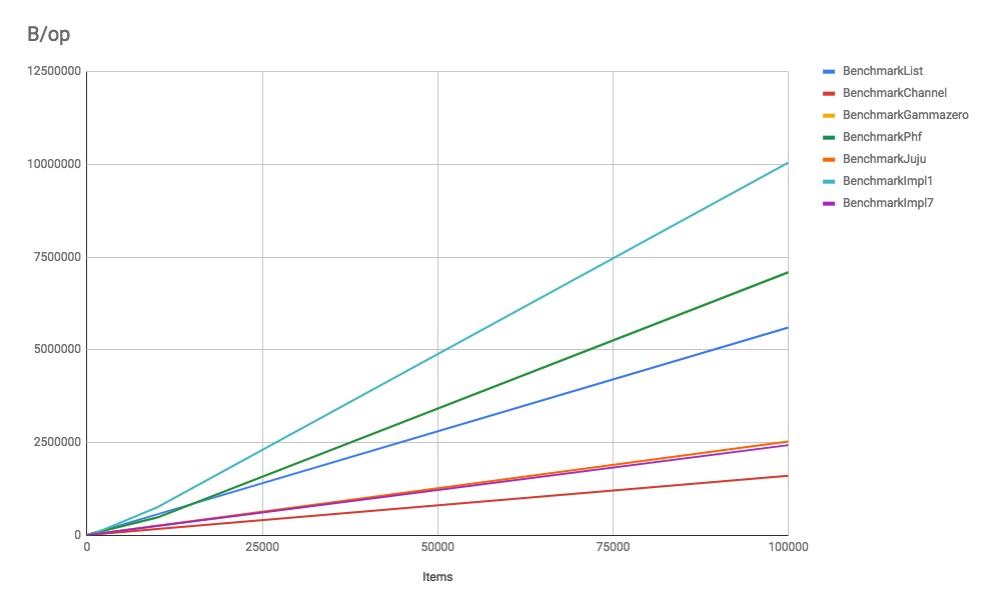

Performance

Memory

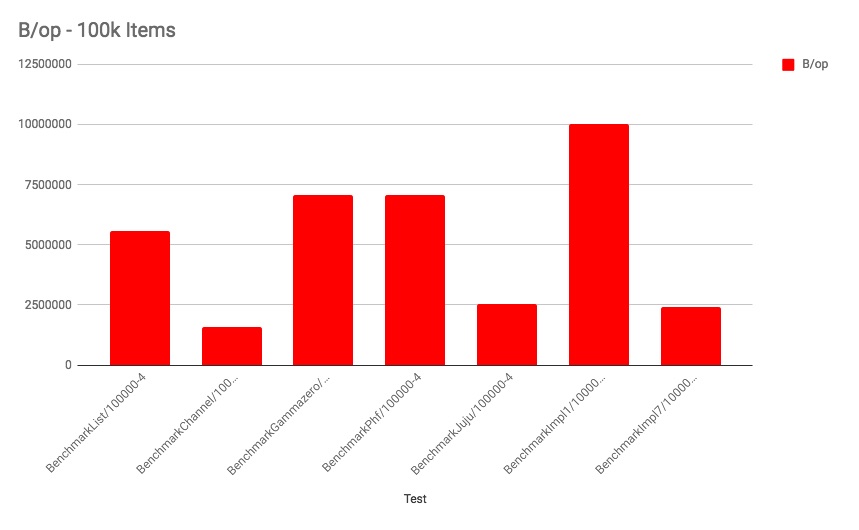

Add and remove 100k items

Performance

Memory

Aggregated Results

Performance

Memory

Detailed, curated results can be found here

Aggregated, curated results can be found here

Given the above results, queueimpl7, henceforth just "impl7", proved to be the most balanced implementation, being either faster or very competitive in all test scenarios from a performance and memory perspective.

Refer here for more details about the tests.

The benchmark tests can be found here.

Impl7 Design and Implementation

Impl7 was the result of the observation that some slice based implementations, such as queueimpl1 and queueimpl2, offer phenomenal performance when the queue is used with small data sets.

For instance, comparing queueimpl3 (a very simple linked slice implementation) with queueimpl1 (a very simple slice based implementation), the results for adding 0 (init time only), 1 and 10 items are very favorable for impl1, from a performance and memory perspective.

Impl7 is a hybrid experiment between using a simple slice based queue implementation for small data sets and the fixed size linked slice approach for large data sets, which is an approach that scales really well, offering really good performance for small and large data sets.

The implementation starts by lazily creating the first slice to hold the first values added to the queue.

The very first created slice is created with capacity 1. The implementation allows the builtin append function to dynamically resize the slice up to 16 (maxFirstSliceSize) positions. After that it reverts to creating fixed size 128 position slices, which offers the best performance for data sets above 16 items.

The limit of 16 items was chosen because it seems to provide the best balance of performance for small and large data sets according to the array size benchmark tests. Above 16 items, growing the slice means allocating a new, larger one and copying all 16 elements from the previous slice into the new one. The append function's phenomenal performance can only compensate for the added copying of elements if the data set is very small, no more than 8 items in the benchmark tests. Above 8 items, the fixed size slice approach, in which 128-sized slices are allocated and linked together when the data structure needs to grow to accommodate new values, is consistently faster and uses less memory.
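The allocation policy described above can be traced with a small simulation. This is only a sketch of the policy as I read it from the description, not the impl7 source; `maxFirstSliceSize` comes from the text, while `maxInternalSliceSize` and `sliceCaps` are names assumed for this example.

```go
package main

import "fmt"

const (
	maxFirstSliceSize    = 16  // first slice grows via append up to this length
	maxInternalSliceSize = 128 // assumed name for the fixed slice size
)

// sliceCaps simulates n pushes (no pops) and returns the capacity of every
// slice that would end up in the chain, in allocation order.
func sliceCaps(n int) []int {
	if n <= 0 {
		return nil
	}
	tail := make([]interface{}, 0, 1) // the first slice starts with capacity 1
	first := true
	var caps []int
	for i := 0; i < n; i++ {
		limit := maxInternalSliceSize
		if first {
			limit = maxFirstSliceSize
		}
		if len(tail) == limit {
			// Current slice is full: record it and link a fixed size one.
			caps = append(caps, cap(tail))
			tail = make([]interface{}, 0, maxInternalSliceSize)
			first = false
		}
		tail = append(tail, i) // only the first slice is ever regrown by append
	}
	return append(caps, cap(tail))
}

func main() {
	for _, n := range []int{8, 16, 17, 145} {
		fmt.Printf("pushes=%d slice capacities=%v\n", n, sliceCaps(n))
	}
}
```

The exact capacity reported for the first slice depends on how the builtin append grows slices on the toolchain in use; the fixed size slices are always allocated at 128 positions.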

Why 16? Why not 15 or 14?

The builtin append function, as of "go1.11 darwin/amd64", seems to double the slice size every time it needs to allocate a new one.

Since the append function will resize the slice from 8 to 16 positions, it makes sense to use all 16 already allocated positions before switching to the fixed size slices approach.
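The growth pattern is easy to observe directly. The sketch below appends values one at a time and records each distinct capacity the slice goes through; `capsSeen` is a helper name made up for this example, and the exact sequence printed may differ between Go versions and platforms.

```go
package main

import "fmt"

// capsSeen appends n values one at a time and returns every distinct
// capacity the slice had along the way, in order.
func capsSeen(n int) []int {
	var s []interface{}
	var caps []int
	for i := 0; i < n; i++ {
		s = append(s, i)
		if len(caps) == 0 || caps[len(caps)-1] != cap(s) {
			caps = append(caps, cap(s))
		}
	}
	return caps
}

func main() {
	fmt.Println(capsSeen(16))
}
```

If the capacities double as described, the last reallocation before reaching 16 items happens at 8 items, which is why all 16 positions are already paid for by the time the queue switches to fixed size slices.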

Design Considerations

Impl7 uses linked slices as its underlying data structure.

The reason for the choice comes from two main observations of slice based queues:

To help clarify the scenario, below is what happens when a slice based queue that already holds, say, 1 billion items needs to expand to accommodate a new item.

Slice based implementation

The same scenario for impl7 plays out like below.

Impl7

Impl7 never copies data around, but slice based ones do, and if the data set is large, it doesn't matter how fast the copying algorithm is. The copying has to be done and will take some time.

The decision to use linked slices was also the result of the observation that slices go to great lengths to provide predictable, indexed positions. A hash table, for instance, absolutely needs this property, but a queue does not. So impl7 completely gives up this property and focuses on what really matters: add to end, retrieve from head. No copying around and repositioning of elements is needed for that. So when a slice goes to great lengths to provide that functionality, the work of allocating new arrays and copying data around is all wasted work. None of that is necessary. And this work costs dearly for large data sets, as observed in the tests.

Impl7 Benchmark Results

The results below compare impl7 with a few selected implementations.

The test names are formatted as shown below.

Examples:

Standard list used as a FIFO queue vs impl7.

Impl7 is:

impl1 (simple slice based queue implementation) vs impl7.

Impl7 is:

It's important to note that the performance and memory gains for impl7 grow in an exponential-like fashion as the data set gets larger, because slice based implementations don't scale well: they pay a higher and higher price, performance and memory wise, every time they need to grow to accommodate an ever expanding data set.

phf (slice, ring based FIFO queue implementation) vs impl7.

Impl7 is:

Buffered channel vs impl7.

Impl7 is:

The above is not really a fair comparison, as standard buffered channels don't scale (at all) and are meant for goroutine synchronization. Nonetheless, they can and do make for an excellent bounded FIFO queue option. Still, impl7 is consistently faster than channels across the board, but uses considerably more memory than channels.
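For reference, this is roughly what using a buffered channel as a bounded FIFO queue looks like. The helper names `newBounded`, `tryPush` and `tryPop` are made up for this sketch; the non-blocking behavior comes from the standard `select` with a `default` case.

```go
package main

import "fmt"

// newBounded returns a buffered channel acting as a bounded FIFO queue.
func newBounded(capacity int) chan interface{} {
	return make(chan interface{}, capacity)
}

// tryPush enqueues v without blocking; it reports false when the buffer is full.
func tryPush(q chan interface{}, v interface{}) bool {
	select {
	case q <- v:
		return true
	default:
		return false
	}
}

// tryPop dequeues the oldest value without blocking; ok is false when empty.
func tryPop(q chan interface{}) (v interface{}, ok bool) {
	select {
	case v = <-q:
		return v, true
	default:
		return nil, false
	}
}

func main() {
	q := newBounded(2)
	fmt.Println(tryPush(q, "a"), tryPush(q, "b"), tryPush(q, "c")) // prints "true true false"
	v, ok := tryPop(q)
	fmt.Println(v, ok) // prints "a true"
}
```

The capacity is fixed at creation time, which is why the third push is rejected instead of growing the queue.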

Given its excellent performance under all scenarios, the hybrid approach impl7 seems to be the ideal candidate for a high performance, low memory footprint general purpose FIFO queue.

For the above reasons, I propose to port impl7 to the standard library.

All raw benchmark results can be found here.

Internal Slice Size

Impl7 uses linked slices as its underlying data structure.

The size of the internal slice does influence performance and memory consumption significantly.

According to the internal slice size bench tests, larger internal slice sizes yield better performance and a lower memory footprint. However, the gains diminish dramatically as the slice size increases.

Below are a few interesting results from the benchmark tests.

Given that larger internal slices also mean potentially more unused memory in some scenarios, 128 seems to be the best balance between performance and the worst case scenario for memory footprint.

Full results can be found here.

API

Impl7 implements the API methods below.

As nil values are considered valid queue values, similarly to the map data structure, "Front" and "Pop" return a second bool parameter to indicate whether the returned value is valid and whether the queue is empty or not.
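The sketch below shows why the second return value matters once nil is a valid element. The `Queue` type here is a hypothetical slice backed stand-in used only to show the return shape; it is not impl7, and `Push` is an assumed method name.

```go
package main

import "fmt"

// Queue is a hypothetical stand-in used to illustrate the (value, ok)
// return shape of "Front" and "Pop".
type Queue struct {
	items []interface{}
}

// Push is an assumed method name for adding a value to the end of the queue.
func (q *Queue) Push(v interface{}) { q.items = append(q.items, v) }

// Front returns the oldest value without removing it; ok is false when empty.
func (q *Queue) Front() (v interface{}, ok bool) {
	if len(q.items) == 0 {
		return nil, false
	}
	return q.items[0], true
}

// Pop removes and returns the oldest value; ok is false when empty.
func (q *Queue) Pop() (v interface{}, ok bool) {
	if len(q.items) == 0 {
		return nil, false
	}
	v = q.items[0]
	q.items[0] = nil // drop the reference
	q.items = q.items[1:]
	return v, true
}

func main() {
	q := &Queue{}
	q.Push(nil) // nil is a valid value

	v, ok := q.Pop()
	fmt.Println(v, ok) // prints "<nil> true": a stored nil

	v, ok = q.Pop()
	fmt.Println(v, ok) // prints "<nil> false": the queue is empty
}
```

Without the bool, the two calls to `Pop` above would be indistinguishable, much like a map lookup without the comma-ok form.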

The reason for the above method names and signatures is the need to keep compatibility with existing Go data structures such as the list, ring and heap packages.

Below are the method names used by the existing list, ring and heap Go data structures, as well as the new proposed queue.

For comparison purposes, below are the method names C++, Java and C# use for their queue implementations.

Drawbacks

The biggest drawback of the proposed implementation is the potentially extra allocated but not used memory in its head and tail slices.

This scenario occurs when exactly 17 items are added to the queue, causing the creation of a full sized internal slice of 128 positions. Initially only the first element in this new slice is used to store the added value. All the other 127 elements are already allocated, but not used.

The worst case scenario occurs when exactly 145 items are added to the queue and 143 items are removed. This causes the queue struct to hold a 128-sized slice as its head slice, with only the last element actually in use. Similarly, the queue struct will hold a separate 128-sized slice as its tail slice, with only the first position in that slice in use.

The above code was run on Go version "go1.11 darwin/amd64".
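Independently of any memory measurement, the position counts in the two scenarios described in this section can be checked with simple arithmetic. The sketch below is my own back-of-the-envelope model, assuming a 16-position first slice followed by 128-position slices and a head that moves to the next slice as soon as the current one is consumed; the function names are hypothetical.

```go
package main

import "fmt"

const (
	firstSliceSize = 16  // positions in the first, append-grown slice
	sliceSize      = 128 // positions in every subsequent fixed size slice
)

// tailUnused returns the free positions left in the tail slice after
// `added` pushes, for added > firstSliceSize.
func tailUnused(added int) int {
	used := (added - firstSliceSize) % sliceSize
	if used == 0 {
		used = sliceSize // the tail slice is exactly full
	}
	return sliceSize - used
}

// headConsumed returns the positions in the head slice whose values were
// already removed after `removed` pops, for removed >= firstSliceSize and
// assuming at least one value is still in the queue.
func headConsumed(removed int) int {
	return (removed - firstSliceSize) % sliceSize
}

func main() {
	// 17 items added: one value in a fresh 128-position slice.
	fmt.Println(tailUnused(17)) // prints "127"

	// 145 added, 143 removed: one live value in head, one in tail.
	fmt.Println(headConsumed(143) + tailUnused(145)) // prints "254"
}
```

This only counts positions, not bytes; the byte cost depends on the element representation on the target platform.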

Open Questions/Issues

Should this be a deque (double-ended queue) implementation instead? The deque could be used as a stack as well, but it would make more sense to have separate queue and stack implementations (as most mainstream languages do) instead of a deque that can also be used as a stack (confusing). The stack is a very important computer science data structure as well, so I believe Go should have a specialized implementation for it too (provided the specialized implementation offers real value to users and not just a "nicely" named interface and methods).

Should "Pop" and "Front" return only the value instead of the value and a second bool parameter (which indicates whether the queue is empty or not)? The implication of the change is adding nil values wouldn't be valid anymore so "Pop" and "Front" would return nil when the queue is empty. Panic should be avoided in libraries.

With a 128-sized internal slice, the worst case scenario leaves 2040 bytes of memory allocated (on a 64-bit system) but not used. Switching to 64 means roughly half the memory would be used, with a slight ~2.89% performance drop (252813ns vs 260137ns). That the extra memory footprint is not worth the extra performance gain is a very good point to make. Should we change this value to 64 or maybe make it configurable?

Should we also provide a safe for concurrent use implementation? A specialized implementation that relies on atomic operations to update its internal indices and length could offer much better performance than a similar implementation that relies on a mutex.
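For context, the mutex based baseline such a specialized implementation would be compared against could look roughly like the sketch below. `SafeQueue` and `fill` are hypothetical names, and the slice backed storage is a placeholder rather than impl7; only the locking pattern is the point here.

```go
package main

import (
	"fmt"
	"sync"
)

// SafeQueue is a hypothetical mutex guarded queue, shown only as the
// baseline an atomic based design would be measured against.
type SafeQueue struct {
	mu    sync.Mutex
	items []interface{}
}

// Push adds v to the end of the queue.
func (q *SafeQueue) Push(v interface{}) {
	q.mu.Lock()
	defer q.mu.Unlock()
	q.items = append(q.items, v)
}

// Pop removes and returns the oldest value; ok is false when empty.
func (q *SafeQueue) Pop() (v interface{}, ok bool) {
	q.mu.Lock()
	defer q.mu.Unlock()
	if len(q.items) == 0 {
		return nil, false
	}
	v = q.items[0]
	q.items[0] = nil
	q.items = q.items[1:]
	return v, true
}

// Len returns the number of values in the queue.
func (q *SafeQueue) Len() int {
	q.mu.Lock()
	defer q.mu.Unlock()
	return len(q.items)
}

// fill pushes perWorker values from each of `workers` goroutines and
// returns the resulting queue length.
func fill(workers, perWorker int) int {
	var q SafeQueue
	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := 0; i < perWorker; i++ {
				q.Push(i)
			}
		}()
	}
	wg.Wait()
	return q.Len()
}

func main() {
	fmt.Println(fill(8, 1000)) // prints "8000"
}
```

Every operation serializes on the same lock, which is the contention an atomic based design would be trying to reduce.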

With the impending release of generics, should we wait to release the new queue package once the new generics framework is released?

Should we implement support for the range keyword for the new queue? It could be done in a generic way so other data structures could also benefit from this feature. For now, IMO, this is a topic for another proposal/discussion.

Summary

I propose to add a new package, "container/queue", to the standard library to support an in-memory, unbounded, general purpose queue implementation.

I feel strongly that this proposal should be accepted for the reasons below.

Update 11/26/2018.

Due to many suggestions to make the queue a deque and to deploy it as a proper external package, the deque package was built and deployed here. The proposal now is to add the deque package to the standard library instead of impl7. Refer here for details.
